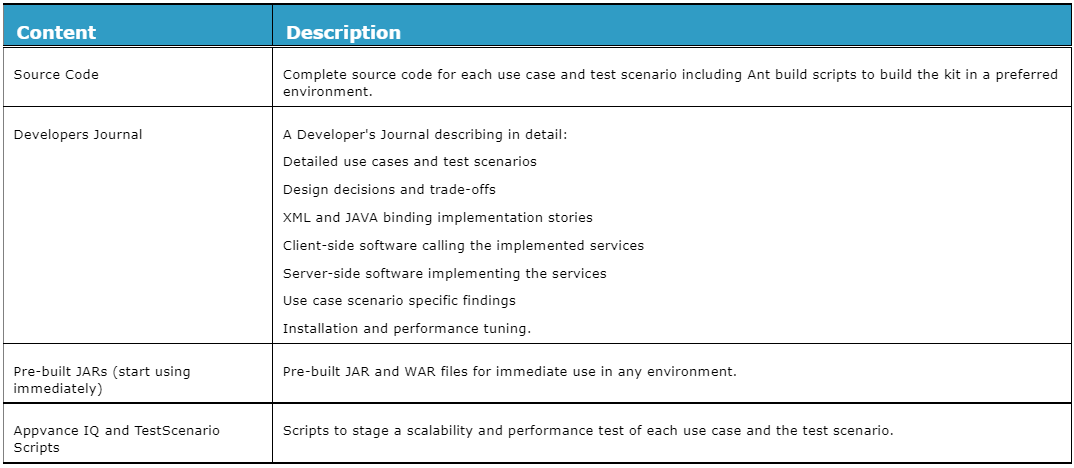

Scalability and Performance Kit Contents

Definitions for Use Case and Test Scenario

Use the Appvance methodology to measure the performance and scalability of SOAP bindings created and deployed using J2EE-based tools, XQuery, and a native XML database. Performance testing compares several methods of receiving a SOAP-based Web Service request and responding to it. Scalability testing looks at how the service operates as the number of concurrent requests increases. Both performance and scalability tests measure throughput, in transactions per second (TPS), at the service consumer.
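As an illustration of measuring TPS at the service consumer, the following is a minimal sketch and not the kit's own test code. The class `TpsProbe`, the method `measure`, and the sleeping stand-in request are all hypothetical names introduced here; a real test would replace the stand-in with an actual SOAP call.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class TpsProbe {

    /** Outcome of one measurement: completed requests and elapsed wall-clock time. */
    public static final class Result {
        public final long completed;
        public final long elapsedNanos;

        Result(long completed, long elapsedNanos) {
            this.completed = completed;
            this.elapsedNanos = elapsedNanos;
        }

        /** Throughput in transactions per second, as seen by the consumer. */
        public double tps() {
            return completed / (elapsedNanos / 1_000_000_000.0);
        }
    }

    /** Runs `requestsPerWorker` requests on each of `concurrency` threads and times the whole batch. */
    public static Result measure(Runnable request, int concurrency, int requestsPerWorker) {
        ExecutorService pool = Executors.newFixedThreadPool(concurrency);
        try {
            List<Callable<Long>> workers = new ArrayList<>();
            for (int i = 0; i < concurrency; i++) {
                workers.add(() -> {
                    long done = 0;
                    for (int j = 0; j < requestsPerWorker; j++) {
                        request.run();
                        done++;
                    }
                    return done;
                });
            }
            long start = System.nanoTime();
            long completed = 0;
            for (Future<Long> f : pool.invokeAll(workers)) {
                completed += f.get();
            }
            long elapsed = Math.max(System.nanoTime() - start, 1L);
            return new Result(completed, elapsed);
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("measurement failed", e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        // Assumption: a 2 ms sleep stands in for one SOAP request/response round trip.
        Runnable fakeRequest = () -> {
            try {
                Thread.sleep(2);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        // Scalability view: repeat the measurement at increasing concurrency levels.
        for (int concurrency : new int[] {1, 4, 16}) {
            Result r = measure(fakeRequest, concurrency, 50);
            System.out.printf("concurrency=%d completed=%d tps=%.1f%n",
                    concurrency, r.completed, r.tps());
        }
    }
}
```

Timing the batch on the consumer side, rather than inside the service, means the reported TPS includes network and serialization costs, which matches the measurement point described above.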

The use cases and test scenarios contrast the TPS differences between the two most popular approaches to parsing XML in a request. They are based on the following observations:

- A standard service test method for Web Services has not emerged. For instance, the SPECjAppServer1 test implements a 4-tier Web browser-based application where a browser connects to Web, application, and database servers in series. Internet applications, on the other hand, are truly multi-tier architectures in which each tier can make multiple SOAP requests to multiple services and data sources at any time. SPECjAppServer and similar 4-tier tests do not provide the reliable Internet application information that capacity planners and software architects need.

- Software architects and developers specialize in service types. For instance, one developer works with complicated XML schemas in order processing services while another concentrates on building content management and publication services in portals.

- The tools, technologies, and libraries available to software architects change rapidly.

In response to these issues, the kit uses use cases common to many SOA environments. These use cases highlight different aspects of SOA creation and present different challenges to the software development tools examined.

- Compiled XML binding using BOD schemas. In this scenario, codenamed TV Dinner, a developer needs to code a part ordering service. The service uses Software Technology in Automotive Retailing (STAR) Business Object Document (BOD) schemas.

  - On the consumer side, the test code instantiates a previously serialized Get Purchase Order (GPO) request document and adds a predetermined number of part elements to the ordered part. On the service side, the service examines only specific elements within the GPO instead of looking through the entire document.

  - The developer's code addresses compartments by their namespace, so it adds only the changing parts of the purchase order. The other compartments (company name, shipping information, etc.) do not change from one GPO request to another. To accomplish this, the TV Dinner scenario uses JAXB-created bindings that allow access to the individual compartments. This XML-to-object binding framework is used so that only the required objects are instantiated.

  - The TV Dinner scenario is so named because, in a TV dinner, the entire dinner is delivered at once while the food is in compartments.

- Streaming XML (StAX) Parser. In this scenario, codenamed Sushi Boats, a developer builds a portal that receives a "blog" style news stream. Each request includes a set of elements containing blog entries. The test code scenario parameters determine the number of blog entries included in each request. The developer needs to take action on the entries of interest and ignore the others. The test code for the Sushi Boats scenario features the JSR 173 Streaming XML (StAX) parser.

  - The Sushi Boats scenario is named after a Japanese sushi bar, where the food passes by in a stream of boats and the diner takes only the dishes they want.

- DOM Approach. In this scenario, codenamed Buffet, a developer writes an order validation service that receives order requests and must read all the elements in a request to determine its response. The test code scenario parameters determine the number of elements inserted into each request. The test code for the Buffet scenario uses Xerces DOM APIs.

  - The Buffet scenario is named after the experience of eating at a buffet restaurant and feeling compelled to visit all the stations.
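The difference between the Sushi Boats and Buffet styles can be sketched with the XML APIs bundled with the JDK. This is an illustrative sketch only, not the kit's test code: the `ParseStyles` class, the `FEED` payload, and the `topic` attribute are hypothetical, and the DOM half uses the standard JAXP `DocumentBuilder` rather than calling Xerces classes directly.

```java
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamReader;
import org.w3c.dom.Document;
import org.xml.sax.InputSource;

public class ParseStyles {

    // Hypothetical request payload: three blog entries, two of them on the topic of interest.
    public static final String FEED =
            "<feed>"
          + "<entry topic=\"soa\"><title>A</title></entry>"
          + "<entry topic=\"food\"><title>B</title></entry>"
          + "<entry topic=\"soa\"><title>C</title></entry>"
          + "</feed>";

    /** Sushi Boats style: stream past every event, act only on entries with the wanted topic. */
    public static int countEntriesOfInterest(String xml, String topic) {
        try {
            XMLStreamReader reader =
                    XMLInputFactory.newInstance().createXMLStreamReader(new StringReader(xml));
            int hits = 0;
            while (reader.hasNext()) {
                if (reader.next() == XMLStreamConstants.START_ELEMENT
                        && "entry".equals(reader.getLocalName())
                        && topic.equals(reader.getAttributeValue(null, "topic"))) {
                    hits++; // a real service would process the entry here
                }
            }
            reader.close();
            return hits;
        } catch (Exception e) {
            throw new IllegalStateException("StAX parse failed", e);
        }
    }

    /** Buffet style: build the full tree in memory, then visit every element. */
    public static int countAllElements(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
            // "*" matches every element in the document, including the root.
            return doc.getElementsByTagName("*").getLength();
        } catch (Exception e) {
            throw new IllegalStateException("DOM parse failed", e);
        }
    }

    public static void main(String[] args) {
        System.out.println("entries of interest (StAX): " + countEntriesOfInterest(FEED, "soa"));
        System.out.println("elements visited (DOM): " + countAllElements(FEED));
    }
}
```

The streaming reader never holds more than the current event, while the DOM version materializes the whole document before any element is inspected. That difference in work per request is what the two scenarios are designed to expose as the element count grows.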

In addition to these use cases, the kit contrasts the performance of native XML databases and relational databases when storing XML data with complex schemas and multiple message sizes in the mid-tier. The kit implements these use cases using both Java and XQuery tools.

Defining the Test Scenario

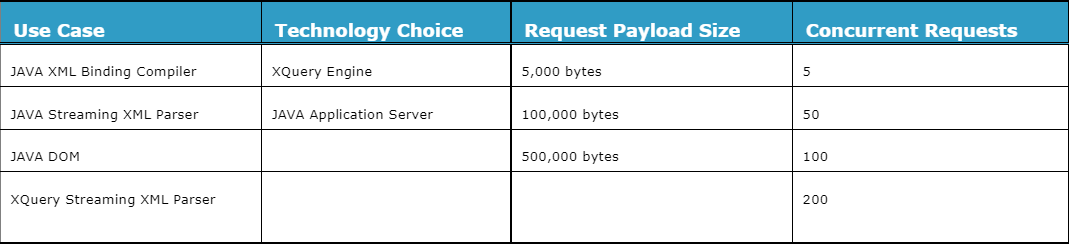

The Test Scenario is the aggregate of all use cases and test cases. For instance, the kit implements several use cases showing different approaches to XML parsing (DOM, XQuery, StAX, and the Binding Compiler). Running these four use cases with two message sizes therefore results in eight test cases in the test scenario. The Test Scenario for this kit combines 4 use cases, 2 technology choices, 3 message payload sizes, and 4 concurrent request levels.

The Test Scenario

The test scenario is the aggregate of all the test cases. For instance, one test case uses the XML Binding Compiler running on an XML database with a 100,000-byte message and 50 concurrent requests. Given these parameters, the test scenario requires 4 × 2 × 3 × 4 = 96 test cases to run.
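The count of 96 follows from taking every combination of the four parameters. A minimal sketch of that enumeration is shown here. Only the four use case names, the 100,000-byte payload, and the 50-request level come from the text; the database labels, the other payload sizes, the other concurrency levels, and the `TestMatrix` class itself are placeholder assumptions.

```java
import java.util.ArrayList;
import java.util.List;

public class TestMatrix {

    /** Builds one descriptive label per combination of the four parameters. */
    public static List<String> enumerate(String[] useCases, String[] technologies,
                                         int[] payloadBytes, int[] concurrencyLevels) {
        List<String> cases = new ArrayList<>();
        for (String useCase : useCases) {
            for (String technology : technologies) {
                for (int bytes : payloadBytes) {
                    for (int concurrency : concurrencyLevels) {
                        cases.add(useCase + " / " + technology + " / "
                                + bytes + " bytes / " + concurrency + " concurrent");
                    }
                }
            }
        }
        return cases;
    }

    /** The scenario described in the text, with assumed values where the text gives none. */
    public static List<String> kitScenario() {
        String[] useCases = {"DOM", "XQuery", "StAX", "Binding Compiler"};
        String[] technologies = {"XML database", "relational database"}; // assumed labels
        int[] payloadBytes = {1_000, 10_000, 100_000};                   // first two assumed
        int[] concurrencyLevels = {1, 10, 50, 100};                      // all but 50 assumed
        return enumerate(useCases, technologies, payloadBytes, concurrencyLevels);
    }

    public static void main(String[] args) {
        List<String> cases = kitScenario();
        System.out.println(cases.size() + " test cases");
        System.out.println("first: " + cases.get(0));
    }
}
```

Because the parameters multiply, adding a fifth use case to this matrix adds 24 test cases, not one.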

If each test case has a 5-minute warm-up period, takes 5 minutes to run, and has a 5-minute cool-down period, each test case occupies 15 minutes and the 96-case test scenario requires 1,440 minutes (24 hours) to run. Because run time grows quickly as the number of use cases increases, use caution when adding use cases to a test scenario; add a use case only if the resulting actionable knowledge is necessary.
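The run-time estimate is simple arithmetic, sketched here so it can be repeated for other scenario sizes. The `ScenarioRuntime` class and its method name are hypothetical, and the sketch assumes test cases run strictly one after another.

```java
import java.time.Duration;

public class ScenarioRuntime {

    /** Total wall-clock time when test cases run sequentially, each with its own warm-up and cool-down. */
    public static Duration total(int testCases, Duration warmUp, Duration run, Duration coolDown) {
        return warmUp.plus(run).plus(coolDown).multipliedBy(testCases);
    }

    public static void main(String[] args) {
        Duration five = Duration.ofMinutes(5);
        Duration total = total(96, five, five, five);
        System.out.println(total.toMinutes() + " minutes = " + total.toHours() + " hours");
    }
}
```

With the figures from the text (96 test cases at 5 + 5 + 5 minutes each), this gives 1,440 minutes, or 24 hours.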