Understanding TPS

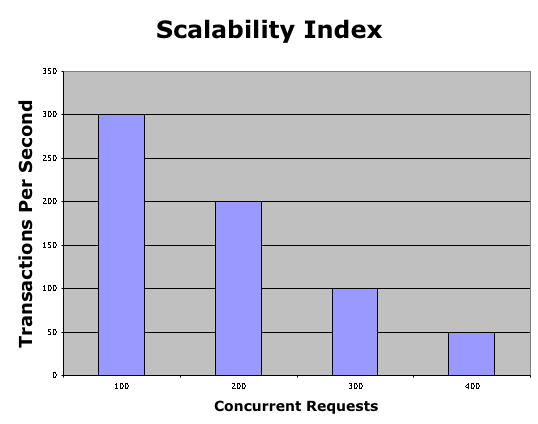

Transactions Per Second (TPS) can be measured, but the results may be counterintuitive. Appvance IQ presents a system’s throughput in a Scalability Index chart, as shown in Test Results of a System’s Throughput at 4 Concurrent Requests Levels.

Test Results of a System’s Throughput at 4 Concurrent Requests Levels

System throughput is measured as the number of transactions a system handles as more requests are received. A perfect information system responds to each request equally quickly regardless of the number of requests; it increases its transactions per second to maintain a constant response time. Charting a perfect system's scalability shows the TPS rate increasing in equal proportion to the number of received requests; it is a linear relationship.

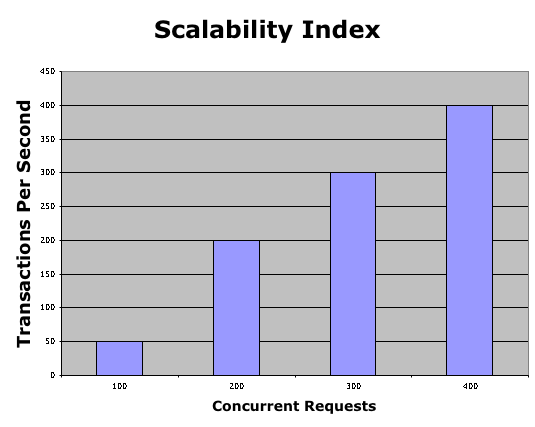

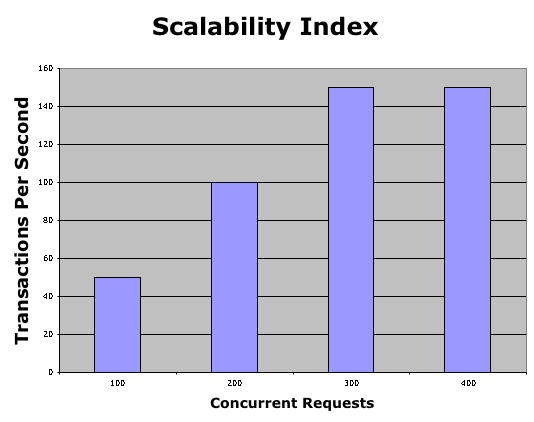

For instance, if a perfect system handles 100 concurrent requests in 10 seconds with a 2-second response time, it should handle 200 concurrent requests in the same 10-second period while maintaining the 2-second response time. Scalability Index for a Perfect System shows the index for such a system, one with linear scalability.

Scalability Index for a Perfect System

At each concurrent request level, the system handles requests at a measured rate in transactions per second, which yields the system's response time. As the concurrent request level increases, a perfect system handles each request just as quickly, so the number of transactions increases in equal proportion. On the chart, TPS keeps going up and to the right in equal proportion to the number of requests.

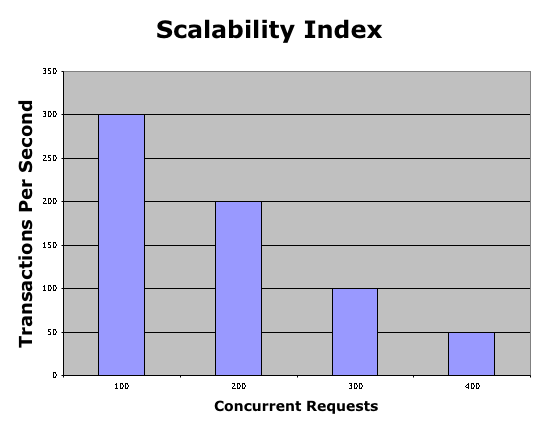

For instance, with 100 concurrent requests, a system handling 1000 requests in a 10-second period yields a handling rate of 100 transactions per second. The same system at 200 concurrent requests handles 2000 requests in the same 10-second period, increasing the handling rate to 200 transactions per second. That is perfect scalability, the Holy Grail of performance testing: receiving more requests does not slow down the overall system response time. However, a typical system hitting a bottleneck has the performance shown in A Service Exhibiting a Performance Bottleneck.
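The arithmetic in the perfect-scalability example above can be checked with a few lines of Python. This is only a sketch of the calculation described in the text; the function name `tps` and the variable names are illustrative and are not part of Appvance IQ.

```python
def tps(transactions: int, period_seconds: float) -> float:
    """Return the handling rate in transactions per second."""
    if period_seconds <= 0:
        raise ValueError("period_seconds must be positive")
    return transactions / period_seconds


# Figures from the example: (concurrent requests, requests handled in 10 s).
levels = [(100, 1000), (200, 2000)]
rates = {cr: tps(handled, 10) for cr, handled in levels}

for cr, rate in rates.items():
    print(f"{cr} concurrent requests -> {rate:.0f} TPS")

# Perfect scalability: TPS grows by the same factor as the request level.
load_factor = 200 / 100
tps_factor = rates[200] / rates[100]
print(f"load x{load_factor:g}, TPS x{tps_factor:g}")
```

When the two factors match, as they do here, the system is scaling linearly between those two request levels.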

A Service Exhibiting a Performance Bottleneck

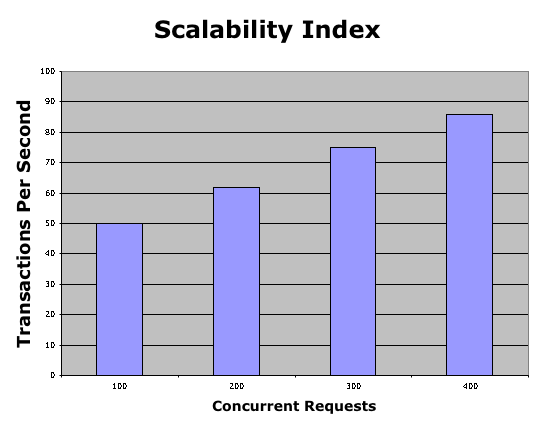

As the system receives a larger number of concurrent requests, it slows down when responding to each request. If the load increased past 400 concurrent requests, the system would eventually reach zero transactions per second. Many systems checked for scalability have this problem. The Scalability Index helps system managers plan the capacity needed to achieve the desired throughput and keep user efficiency constant, and it helps developers understand how their design and coding decisions impact performance. However, the situation depicted in A Service Exhibiting a Scalability Problem is more typical of what is observed.

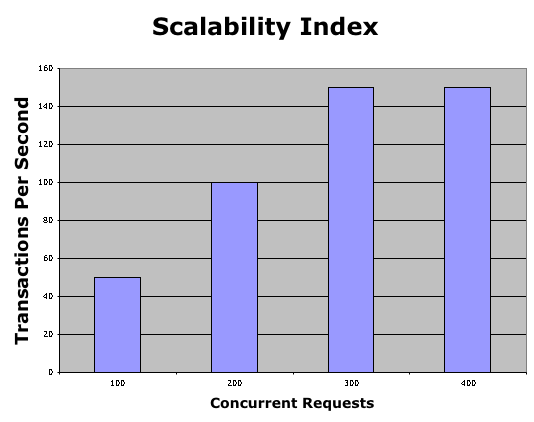

A Service Exhibiting a Scalability Problem

As shown in the first three columns, the system is capable of handling an increased level of concurrent requests. However, the fourth column shows the system hits an upper limit in handling transactions, typically resulting from a database indexing problem, a full data cache, or a saturated network connection.

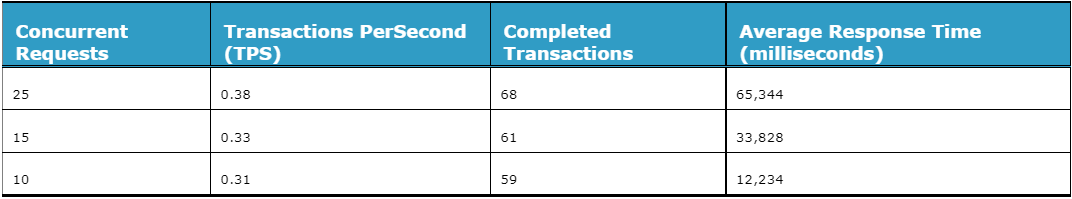

Interpreting TPS is not always this simple, as the scalability test results in Parameter Values Required to Calibrate a Test show.

Parameter Values Required to Calibrate a Test

In the results in Parameter Values Required to Calibrate a Test, TPS increases only slightly even though the test makes 2.5 (25 / 10) times more concurrent requests. With linear scaling, the TPS value should have increased 2.5 times to 0.775 (0.31 TPS at 10 concurrent requests times 2.5). Free-running threads generate the concurrent requests with no sleep time between requests, as illustrated in Throughput (TPS) Decrease as Service Response Time Increase as Measured from the Consumer. Their job is to keep making requests to the server during the test period. Yet the average response time with 25 users is 5.34 times as long (65,344 milliseconds at 25 users divided by 12,234 milliseconds at 10 users). Because each request takes longer to complete, each thread has fewer opportunities to log results during the test period and therefore to increase the TPS value.
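The two ratios quoted above can be reproduced as follows. The sketch uses only the figures given in the text; the variable names are illustrative.

```python
# Figures quoted in the text for the two concurrent request levels.
low_cr, high_cr = 10, 25
tps_at_low_cr = 0.31
response_ms_low, response_ms_high = 12_234, 65_344

# How much more load the second test level applies.
load_factor = high_cr / low_cr

# TPS that linear scaling would have produced at the higher level.
expected_tps = tps_at_low_cr * load_factor

# How much longer the average response takes at the higher level.
response_factor = response_ms_high / response_ms_low

print(f"load factor:          {load_factor:.2f}")
print(f"expected TPS:         {expected_tps:.3f}")
print(f"response time factor: {response_factor:.2f}")
```

Comparing `load_factor` with `response_factor` shows the mismatch: the load grew 2.5 times while the average response time grew more than 5 times.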

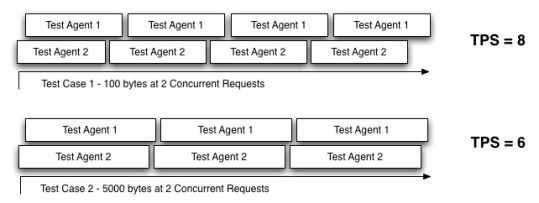

Throughput (TPS) Decrease as Service Response Time Increase as Measured from the Consumer

When a test increases the number of concurrent users, one of three things can happen:

- The server takes less time (on average) to respond than at lower concurrent request (CR) levels. In this condition, each CR finishes sooner, logs a response (a transaction), and makes its next request much sooner. TPS increases relative to the lower CR levels.

- The server takes the same time (on average) to respond as at lower CR levels. In this condition, each CR takes the same amount of time, but more CRs are running concurrently. TPS goes up from the lower CR levels in proportion to the increase in CRs.

- The server takes more time (on average) to respond than at lower CR levels. In this condition, each CR finishes later, resulting in fewer opportunities for the server to handle more requests. TPS drops in proportion to the increased response time.
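The three outcomes above can be illustrated with a deliberately simplified model. It assumes free-running threads with no sleep time, so that TPS is roughly the number of CRs divided by the average response time. That formula is an assumption made for illustration, not the calculation Appvance IQ performs, and real test results can deviate from it. The function name `tps_change_factor` and the scenario values are hypothetical.

```python
def tps_change_factor(cr_factor: float, response_time_factor: float) -> float:
    """Factor by which TPS changes under the assumed model TPS = CRs / response time."""
    if cr_factor <= 0 or response_time_factor <= 0:
        raise ValueError("factors must be positive")
    return cr_factor / response_time_factor


# Hypothetical scenarios: the number of CRs doubles in each case, while the
# average response time is multiplied by the value shown.
cr_factor = 2.0
scenarios = {
    "faster responses": 0.5,
    "same response time": 1.0,
    "slower responses": 4.0,
}

changes = {
    name: tps_change_factor(cr_factor, response_factor)
    for name, response_factor in scenarios.items()
}

for name, change in changes.items():
    print(f"{name}: TPS x{change:g}")
```

In this model, TPS rises whenever the response time grows more slowly than the number of CRs, and falls once the response time grows faster than the number of CRs.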